Enabling Mobile Robots to Know Where They Can and Cannot Drive


Robust Matching for More Accurate Feature Correspondences

For any indirect visual SLAM solution, estimating the relative camera motion between two consecutive images depends critically on finding correct correspondences between the features extracted from those images. Given a set of feature correspondences, one can use an n-point algorithm with robust M-estimators to produce the best estimate of the relative camera pose. The accuracy of a motion estimate therefore depends heavily on the accuracy of the feature correspondences. This dependency is even more significant when features are extracted from images of scenes with drastic changes in viewpoint and illumination, and with occlusions. To make feature matching robust to such challenging scenes, we propose a new feature matching method that incrementally chooses five pairs of matched features for a full DoF (Degree of Freedom) camera motion estimation. In particular, at the first stage, we use our 2-point algorithm to estimate a camera motion, and at the second stage, we use this estimated motion to choose three more matched features. In addition, for more accurate outlier rejection, we use a planar constraint instead of the epipolar constraint. With this set of five matching features, we estimate a full DoF camera motion with scale ambiguity. In experiments with three real-world datasets, our method demonstrates its effectiveness and robustness by successfully matching features 1) from images of a night market with frequent occlusions and varying illumination, 2) from images of a night market taken by a handheld camera and by Google Street View, and 3) from images of the same location taken in the daytime and at nighttime. Read the following paper to learn more about this work:

- Chih-Chung Chou, Young-Woo Seo, and Chieh-Chih Wang, A two-stage sampling for robust feature matching, Journal of Field Robotics, 35(5): 779-801, 2018.
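
To illustrate why a planar constraint can reject outliers that an epipolar constraint lets through, here is a minimal sketch. It assumes the planar constraint can be written as a plane-induced homography H = R + t nᵀ / d, which is a standard textbook form and not necessarily the formulation used in the paper. The function names, the toy camera motion (pure sideways translation), and the plane Z = 5 are all hypothetical choices made for illustration.

```python
import math

def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]

def epipolar_residual(E, p1, p2):
    """|x2^T E x1| for normalized image points p1, p2 (zero on the epipolar line)."""
    x1 = [p1[0], p1[1], 1.0]
    x2 = [p2[0], p2[1], 1.0]
    return abs(sum(a * b for a, b in zip(x2, mat_vec(E, x1))))

def planar_transfer_error(H, p1, p2):
    """Distance between p2 and the homography-mapped p1."""
    u, v, w = mat_vec(H, [p1[0], p1[1], 1.0])
    return math.hypot(u / w - p2[0], v / w - p2[1])

# Assumed toy setup: R = I, t = (1, 0, 0), scene plane Z = 5 in the first camera.
E = [[0.0, 0.0, 0.0],
     [0.0, 0.0, -1.0],
     [0.0, 1.0, 0.0]]   # E = [t]_x R
H = [[1.0, 0.0, 0.2],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]   # H = R + t n^T / d with n = (0, 0, 1), d = 5

p1 = (0.1, 0.2)
good_match = (0.3, 0.2)   # true projection of the same plane point
bad_match = (0.8, 0.2)    # wrong feature that happens to lie on the epipolar line

for name, p2 in (("good", good_match), ("bad", bad_match)):
    print(name,
          "epipolar:", epipolar_residual(E, p1, p2),
          "planar:", planar_transfer_error(H, p1, p2))
```

The epipolar constraint only restricts a match to a line, so the wrong match that slid along that line still has zero residual. The homography predicts a single point, so the same wrong match shows a large transfer error and can be rejected with a distance threshold.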

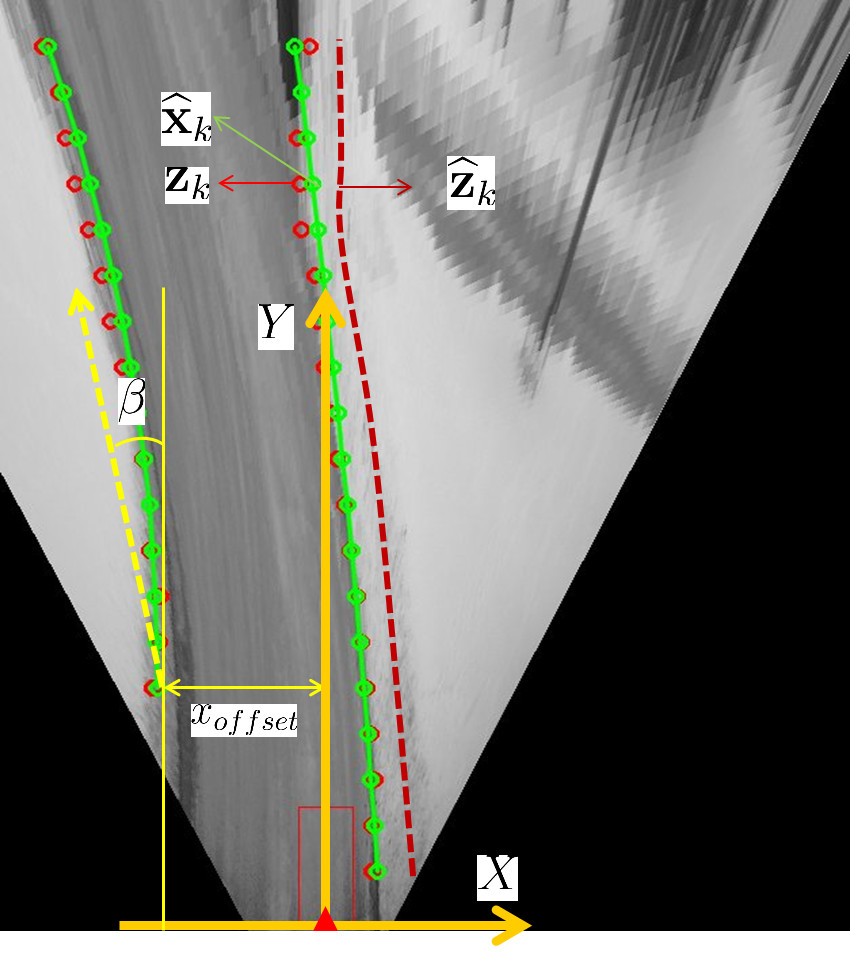

Tracking Traversable Region Boundary

This work presents a new method of detecting and tracking the boundaries of drivable regions on roads without road markings. As unmarked roads connect residential places to public roads, the capability of driving autonomously on such roadways is important to truly realize self-driving cars in daily driving scenarios. To detect the left and right boundaries of drivable regions, our method samples the image region in front of the ego-vehicle and uses the appearance information of that region to identify the boundary of the drivable region in input images. Due to variation in image acquisition conditions, the image features necessary for boundary detection may not be present. When this happens, a boundary detection algorithm working on a frame-by-frame basis would fail to detect the boundaries. To handle these cases effectively, our method tracks the detected boundaries over frames using a Bayes filter. Experiments using real-world videos show promising results. Read the following paper to learn more about this work:

- Young-Woo Seo and Raj Rajkumar, Detection and tracking of boundary of unmarked roads, In Proceedings of the 17th International Conference on Information Fusion (Fusion-2014), Salamanca, Spain, 2014.
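
The idea of carrying a boundary estimate through frames where detection fails can be sketched with a small discrete Bayes (histogram) filter. This is an illustrative sketch only, not the filter from the paper: the state is assumed to be the image column bin of a single boundary, and the function names, the number of bins, and the `spread` and `hit` parameters are hypothetical.

```python
def predict(belief, spread=0.1):
    """Diffuse belief to neighboring bins to model frame-to-frame drift."""
    n = len(belief)
    out = [0.0] * n
    for i, p in enumerate(belief):
        out[i] += p * (1.0 - 2.0 * spread)
        out[max(i - 1, 0)] += p * spread        # mass is clamped at the edges
        out[min(i + 1, n - 1)] += p * spread
    return out

def update(belief, detected_bin, hit=0.8):
    """Reweight belief by a simple detection likelihood.

    A missing detection (None) leaves the predicted belief unchanged,
    which is how the filter bridges frames without usable features.
    """
    if detected_bin is None:
        return belief
    miss = (1.0 - hit) / (len(belief) - 1)
    weighted = [p * (hit if i == detected_bin else miss)
                for i, p in enumerate(belief)]
    total = sum(weighted)
    return [w / total for w in weighted]

def track(detections, n_bins=10):
    """Return the most likely boundary bin after each frame."""
    belief = [1.0 / n_bins] * n_bins            # start with no prior knowledge
    estimates = []
    for z in detections:
        belief = update(predict(belief), z)
        estimates.append(max(range(n_bins), key=belief.__getitem__))
    return estimates

# Third frame has no detection; the estimate is carried over from the belief.
print(track([4, 4, None, 4, 5, 5]))
```

A frame-by-frame detector would produce no output for the third frame, whereas the filter still reports a boundary estimate from its predicted belief and then follows the boundary when it shifts.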

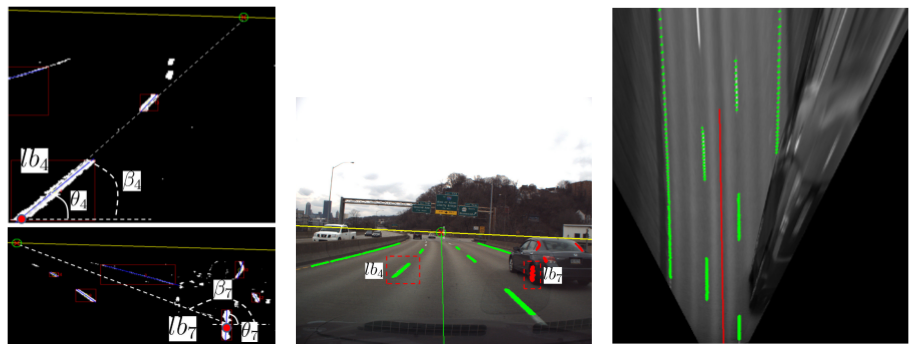

Lane-Marking Detection

In advanced driver assistance systems and self-driving cars, many computer vision applications rely on knowing the location of the vanishing point on the horizon. The horizontal vanishing point's location provides important information about the driving environment, such as the instantaneous driving direction of the roadway, the sampling regions for the image features of drivable regions, and the search direction for moving objects. To detect the vanishing point, many existing methods work frame by frame. Their outputs may look optimal within a single frame. Over a series of frames, however, the detected locations are inconsistent, yielding unreliable information about roadway structure. This work studies a novel algorithm that detects vanishing points in urban scenes using lines and tracks them over frames using an Extended Kalman Filter (EKF) to smooth the trajectory of the horizontal vanishing point. The study demonstrates both the practicality of the detection method and the effectiveness of our tracking method through experiments carried out on thousands of urban scene images. Read the following papers to learn more about this work:

- Young-Woo Seo and Raj Rajkumar, Detection and tracking of the vanishing point on a horizon for automotive applications, In Proceedings of the 6th IEEE IROS Workshop on Planning, Perception and Navigation for Intelligent Vehicles (PPNIV-2014), Chicago, IL, Sep 14-18, 2014.

- Young-Woo Seo and Raj Rajkumar, Utilizing instantaneous driving direction for enhancing lane-marking detection, In Proceedings of the 25th IEEE Intelligent Vehicles Symposium (IV-2014), pp. 170-175, Dearborn, MI, 2014.
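
The effect of tracking on a jittery sequence of detections can be sketched with a filter much simpler than the EKF used in this work. The sketch below is a plain linear Kalman filter with a random-walk model applied to the (x, y) pixel location of the vanishing point. The function name and the noise parameters `q` and `r` are hypothetical and chosen only for illustration.

```python
def kalman_smooth(measurements, q=1.0, r=25.0):
    """Smooth a sequence of (x, y) vanishing-point detections in pixels.

    Assumptions (not from the paper): random-walk motion with process
    variance q, isotropic measurement variance r, and independent axes
    that share one scalar error variance p.
    """
    (x, y), p = measurements[0], r      # initialize from the first detection
    track = [(x, y)]
    for zx, zy in measurements[1:]:
        p += q                          # predict: uncertainty grows
        k = p / (p + r)                 # Kalman gain, same for both axes
        x += k * (zx - x)               # correct toward the new detection
        y += k * (zy - y)
        p *= (1.0 - k)                  # posterior variance shrinks
        track.append((x, y))
    return track

# The third detection is an outlier-like jump; the filtered track moves
# only part of the way toward it.
raw = [(320.0, 240.0), (322.0, 241.0), (380.0, 200.0), (321.0, 239.0)]
for z, s in zip(raw, kalman_smooth(raw)):
    print(z, "->", (round(s[0], 1), round(s[1], 1)))
```

Raising `r` relative to `q` makes the track smoother but slower to follow a real change in driving direction, which is the usual trade-off when tuning such a filter.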

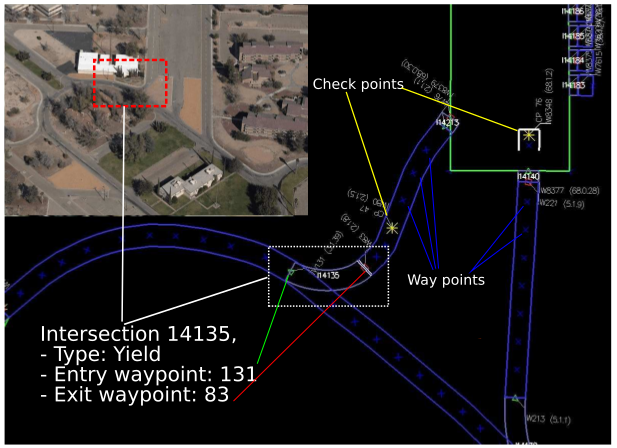

Analyzing Ortho-Images to Generate Lane-Level Maps

Maps are important for both human and robot navigation. Given a route, driving assistance systems consult maps to guide human drivers to their destinations. Similarly, topological maps of a road network provide a robotic vehicle with information about where it can drive and what driving behaviors it should use. By providing the necessary information about the driving environment, maps simplify both manual and autonomous driving. The majority of existing cartographic databases are built through manual surveys and operator interactions, primarily to assist human navigation. Hence, the resolution of existing maps is insufficient for robotics applications. Also, the coverage of these maps does not extend to places where robotics applications require detailed geometric information. To augment the resolution and coverage of existing maps, this work investigates computer vision algorithms that automatically build detailed lane-level maps of highways and parking lots by analyzing publicly available cartographic resources such as orthoimagery. Read the following papers to learn more about this work:

- Young-Woo Seo, David Wettergreen, and Chris Urmson, Exploiting publicly available cartographic resources for aerial image analysis, In Proceedings of the ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems (GIS-2012), pp. 109-118, Redondo Beach, CA, 2012.

- Young-Woo Seo, David Wettergreen, and Chris Urmson, Ortho-image analysis for producing lane-level highway maps, In Proceedings of the ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems (GIS-2012), pp. 506-509, Redondo Beach, CA, 2012.

- Young-Woo Seo, Chris Urmson, David Wettergreen, and Jin-Woo Lee, Building lane-graphs for autonomous parking, In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2010), pp. 6052-6057, Taipei, Taiwan, 2010.

- Young-Woo Seo, Chris Urmson, David Wettergreen, and Rahul Sukthankar, Adapting to intra-class variations using incremental retraining with exploratory sampling, Tech Report CMU-RI-TR-10-36, Oct, 2010.

- Young-Woo Seo, Chris Urmson, David Wettergreen, and Jin-Woo Lee, Augmenting cartographic resources for autonomous driving, In Proceedings of the ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems (GIS-2009), pp. 13-22, Seattle, WA, November, 2009.

- Young-Woo Seo and Chris Urmson, Utilizing prior information to enhance self-supervised aerial image analysis for extracting parking lot structures, In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS-2009), pp. 339-344, St. Louis, MO, October, 2009.

- Young-Woo Seo, Nathan Ratliff, and Chris Urmson, Self-supervised aerial image analysis for extracting parking lot structure, In Proceedings of the Twenty-First International Joint Conference on Artificial Intelligence (IJCAI-2009), pp. 1837-1842, Pasadena, CA, July, 2009.